Multi-Setup Depth Perception through Virtual Image Hallucination

ECCV 2024 DEMO

Exhibition Area, Thursday, October 3, 9:00 am to 12:30 pm CEST


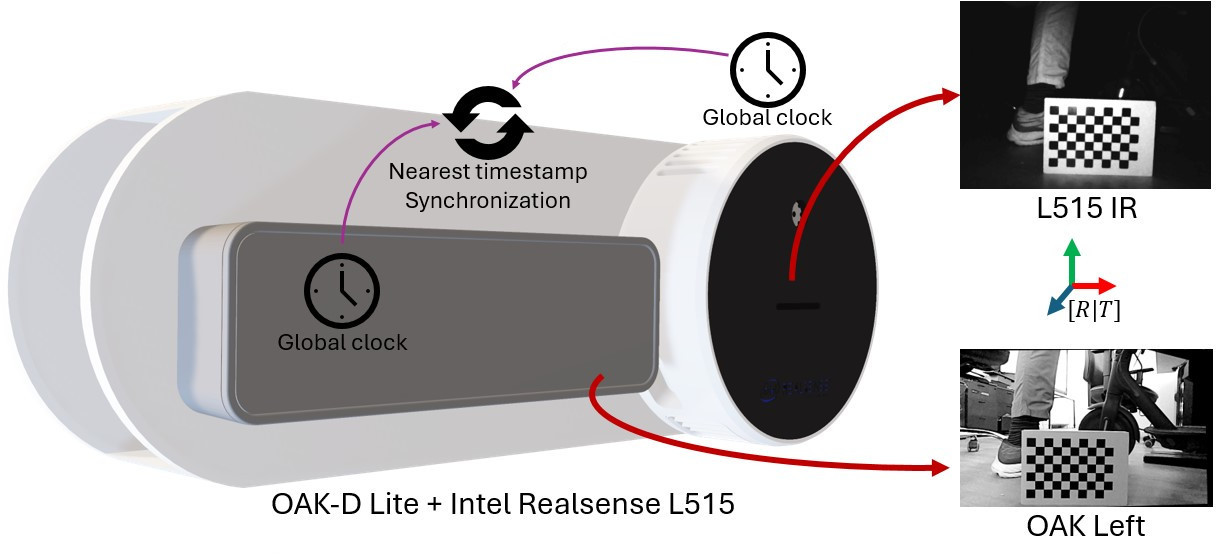

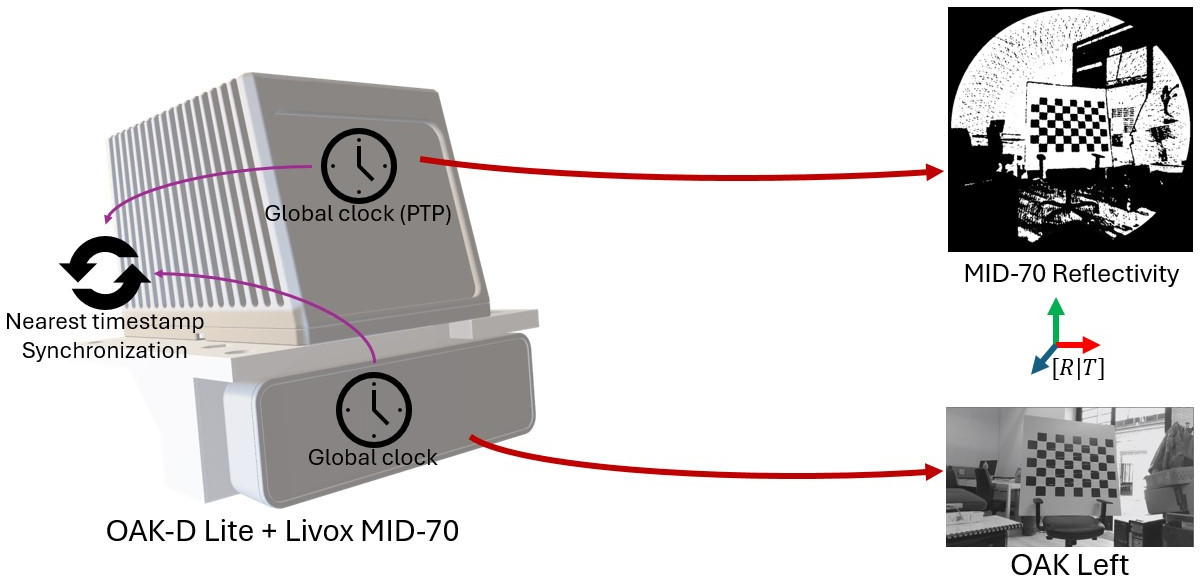

Our prototypes at ECCV 2024. We built two prototypes for indoor and outdoor scenarios, composed of an OAK-D Lite stereo camera with a built-in stereo matching algorithm, an Intel RealSense L515 LiDAR as the indoor sparse depth sensor, and a Livox MID-70 as the indoor/outdoor sparse depth sensor. The sensors are rigidly fixed to a handmade aluminium support to guarantee a stable calibration over time.
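A stable calibration matters because the LiDAR points must be mapped into the stereo camera's image plane with a fixed LiDAR-to-camera transform before they can be used. As a minimal sketch of that step (not the authors' code), the hypothetical function `lidar_to_sparse_depth` below assumes a pinhole intrinsic matrix `K` for the left camera and an extrinsic rotation `R` and translation `t` from the LiDAR frame to the left camera frame, obtained from an offline calibration; lens distortion is ignored.

```python
import numpy as np


def lidar_to_sparse_depth(points, K, R, t, height, width):
    """Project LiDAR points (N x 3, LiDAR frame) into a sparse depth map
    aligned with the left camera (hypothetical helper, sketch only).
    Pixels without a LiDAR measurement are set to 0."""
    # Rigid transform LiDAR -> left camera frame: X_cam = R @ X_lidar + t
    cam = points @ R.T + t
    # Keep only points in front of the camera (positive depth)
    cam = cam[cam[:, 2] > 0]
    # Pinhole projection: [u*z, v*z, z]^T = K @ X_cam
    uvw = cam @ K.T
    u = np.round(uvw[:, 0] / uvw[:, 2]).astype(int)
    v = np.round(uvw[:, 1] / uvw[:, 2]).astype(int)
    z = cam[:, 2]
    # Discard projections that fall outside the image
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, z = u[inside], v[inside], z[inside]
    depth = np.full((height, width), np.inf)
    # Z-buffer: if several points hit the same pixel, keep the nearest one
    np.minimum.at(depth, (v, u), z)
    depth[np.isinf(depth)] = 0.0  # 0 marks "no measurement"
    return depth
```

Keeping the nearest point per pixel is a simple way to avoid writing background depth over foreground surfaces when several LiDAR returns land on the same pixel.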

Abstract


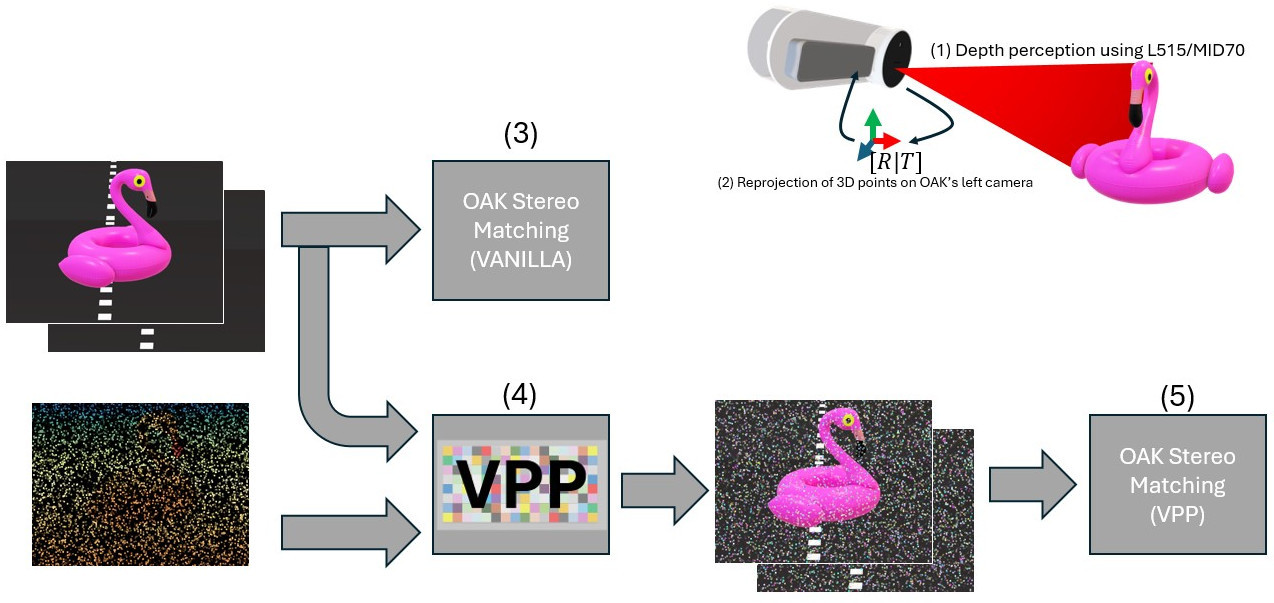

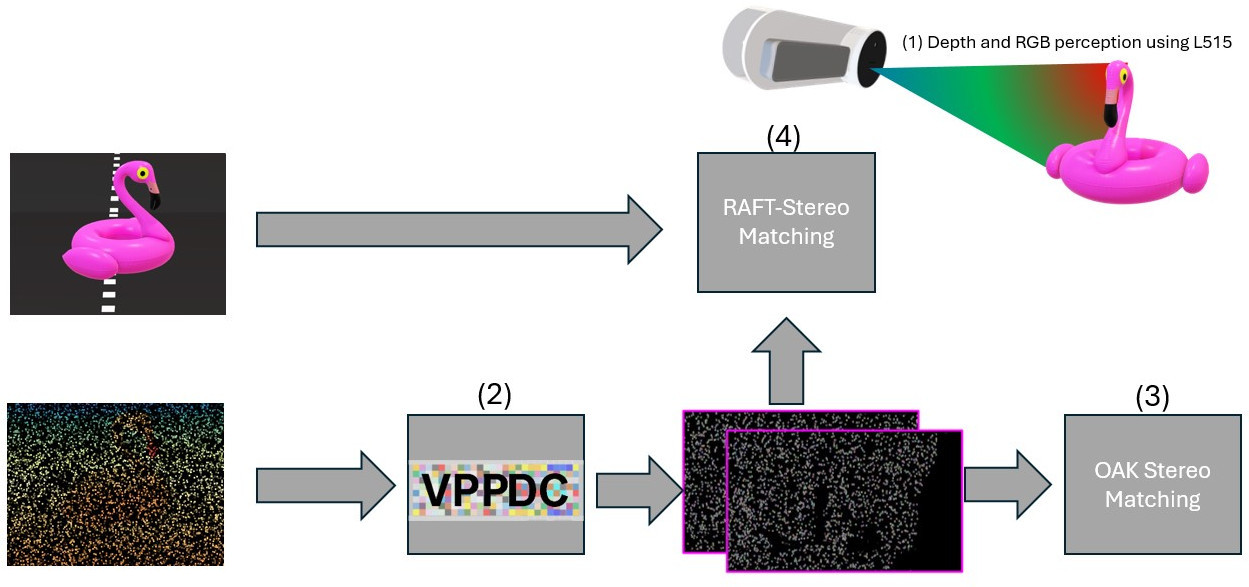

"The demo aims to showcase a novel matching paradigm, proposed at ICCV 2023, based on the hallucination of vanilla input images gathered by standard imaging devices. Its working principle consists of projecting virtual patterns onto the images according to the sparse depth points gathered by a depth sensor, enabling robust and dense depth estimation at the resolution of the input images. Such a virtual hallucination strategy can be seamlessly used with any algorithm or deep network for stereo correspondence, dramatically improving the performance of the baseline methods. In contrast to active stereo systems based on a conventional pattern projector (e.g., Intel RealSense or OAK-D Pro stereo cameras), our proposal acts on the vanilla RGB images, is effective at any distance, even under sunlight, and requires neither additional IR/RGB cameras nor a physical IR pattern projector. Moreover, our virtual projection paradigm is general and can be applied to other tasks, such as depth completion, as proposed at 3DV 2024, and, more recently, event-based stereo matching, as proposed at ECCV 2024. In the demo, using multiple setups, we will show the ECCV community how flexible and effective the virtual pattern projection paradigm is through a real-time demo based on off-the-shelf cameras and depth sensors. Specifically, we will present our proposal with different algorithms and networks in a live session on a standard notebook connected to a commercial stereo camera (e.g., OAK-D camera) and multiple depth sensors (e.g., RealSense LiDAR L515 and Livox MID-70)."
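The projection principle described above can be sketched for a rectified stereo pair: a sparse depth `z` at left pixel `(x, y)` corresponds to a disparity `d = f * b / z` (focal length `f` in pixels, baseline `b`), so the matching point lies at `(x - d, y)` in the right image, and painting the same random pattern at both locations makes that correspondence easy to find for any matcher. The hypothetical `hallucinate_pair` below is a simplified illustration under these assumptions: it rounds disparities to whole pixels, uses one uniform random color per point, expects H x W x C images, and blends with a fixed `alpha`. The published method may differ in pattern design, sub-pixel handling, and occlusion handling.

```python
import numpy as np


def hallucinate_pair(left, right, sparse_depth, focal_px, baseline_m,
                     patch_radius=1, alpha=1.0, seed=0):
    """Paint identical random patches at corresponding points of a rectified
    stereo pair (H x W x C), using sparse depth to locate each match.
    Simplified sketch: integer disparities, one random color per point."""
    rng = np.random.default_rng(seed)
    left_v = left.astype(np.float32)
    right_v = right.astype(np.float32)
    h, w = sparse_depth.shape
    ys, xs = np.nonzero(sparse_depth > 0)
    for y, x in zip(ys, xs):
        # Depth-to-disparity for a rectified pair: d = f * b / z
        disp = focal_px * baseline_m / sparse_depth[y, x]
        xr = int(round(x - disp))  # matching column in the right image
        if xr < 0:
            continue  # correspondence falls outside the right image
        color = rng.uniform(0, 255, size=left.shape[2])
        y0, y1 = max(y - patch_radius, 0), min(y + patch_radius + 1, h)
        # Blend the same color into both views, clipping at image borders
        for img, cx in ((left_v, x), (right_v, xr)):
            x0, x1 = max(cx - patch_radius, 0), min(cx + patch_radius + 1, w)
            img[y0:y1, x0:x1] = (1 - alpha) * img[y0:y1, x0:x1] + alpha * color
    return (left_v.round().astype(left.dtype),
            right_v.round().astype(right.dtype))
```

Because the output is just a pair of images, it can be passed unchanged to an existing matcher, for instance OpenCV's `cv2.StereoSGBM_create(...)` followed by `.compute(left, right)`, which is what makes the approach independent of the chosen stereo algorithm or network.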

The Prototypes


Video


BibTeX

@InProceedings{Bartolomei_2023_ICCV,
  author = {Bartolomei, Luca and Poggi, Matteo and Tosi, Fabio and Conti, Andrea and Mattoccia, Stefano},
  title = {Active Stereo Without Pattern Projector},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  month = {October},
  year = {2023},
  pages = {18470--18482}
}

@misc{bartolomei2024stereodepth,
  title = {Multi-Setup Depth Perception through Virtual Image Hallucination},
  author = {Bartolomei, Luca and Poggi, Matteo and Tosi, Fabio and Conti, Andrea and Mattoccia, Stefano},
  year = {2024},
  eprint = {2406.04345},
  archivePrefix = {arXiv},
  primaryClass = {cs.CV}
}

@inproceedings{bartolomei2024revisiting,
  title = {Revisiting depth completion from a stereo matching perspective for cross-domain generalization},
  author = {Bartolomei, Luca and Poggi, Matteo and Conti, Andrea and Tosi, Fabio and Mattoccia, Stefano},
  booktitle = {2024 International Conference on 3D Vision (3DV)},
  pages = {1360--1370},
  year = {2024},
  organization = {IEEE}
}

@inproceedings{bartolomei2024lidar,
  title = {LiDAR-Event Stereo Fusion with Hallucinations},
  author = {Bartolomei, Luca and Poggi, Matteo and Conti, Andrea and Mattoccia, Stefano},
  booktitle = {European Conference on Computer Vision (ECCV)},
  year = {2024}
}

Acknowledgements

This study was carried out within the MOST - Sustainable Mobility National Research Center and received funding from the European Union NextGenerationEU - PIANO NAZIONALE DI RIPRESA E RESILIENZA (PNRR) - MISSIONE 4 COMPONENTE 2, INVESTIMENTO 1.4 - D.D. 1033 17/06/2022, CN00000023. This manuscript reflects only the authors' views and opinions; neither the European Union nor the European Commission can be considered responsible for them.